Project Coordinator: Assoc. Prof. Mustafa Mert Ankaralı

Project Type: TUBITAK 1005

Project Budget: 1,000,000 TL

Project Duration: 18 Months

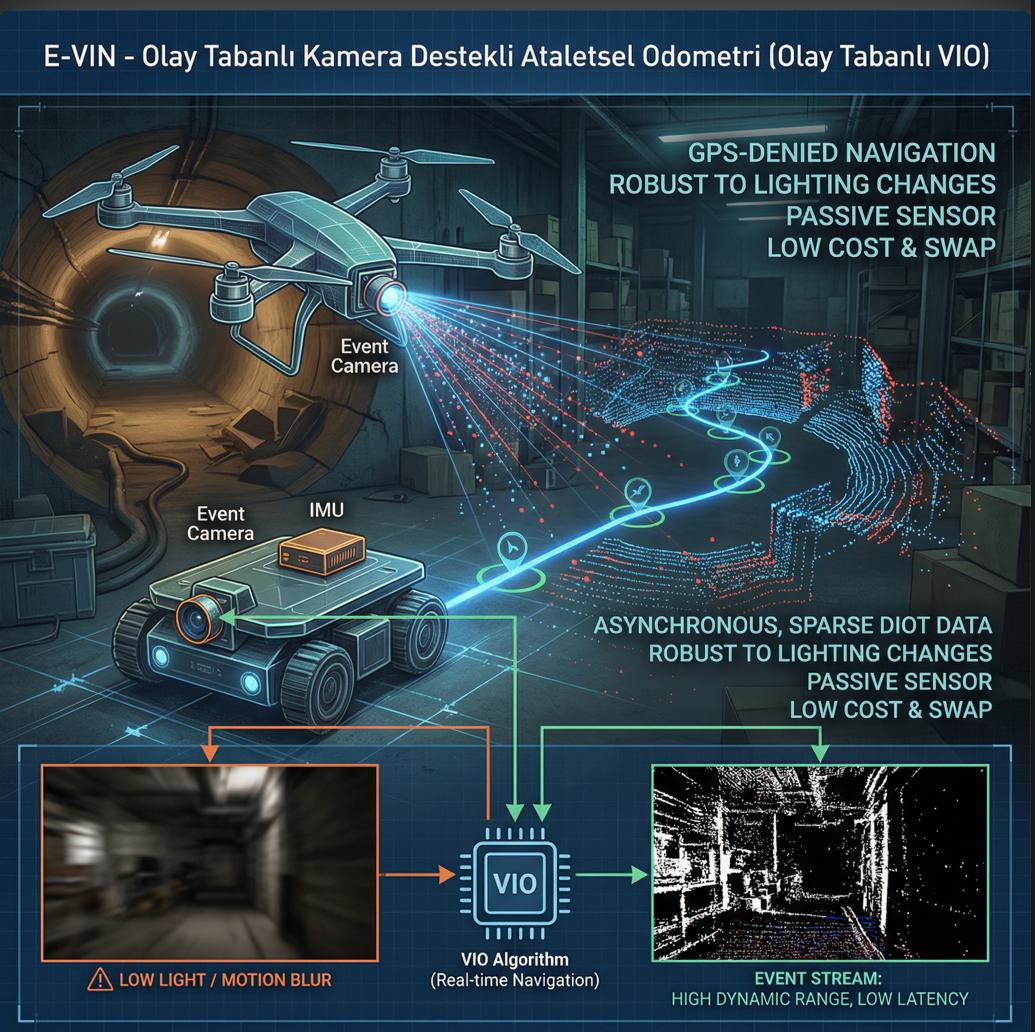

This project aims to develop a next-generation event-camera-assisted visual-inertial odometry (event-based VIO) system for autonomous platforms operating in environments where GPS is unavailable or unreliable. Conventional navigation systems based on RGB cameras and LiDAR sensors have critical limitations, including sensitivity to low light and sudden illumination changes, as well as high cost, weight, and energy consumption. Event-based cameras, inspired by biological vision, address these challenges: each pixel asynchronously reports only changes in brightness in the scene, which yields high dynamic range, ultra-low latency, and robustness to motion blur.
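To make the sensing principle concrete, below is a minimal sketch of the idealized event-generation model often used to describe event cameras. Under this model, a pixel emits an event with polarity +1 or -1 each time its log-intensity changes by a contrast threshold C since that pixel's last event. This is an illustrative assumption, not the project's method. The function name `generate_events`, the threshold value, and the frame-based input are hypothetical. A real sensor fires asynchronously per pixel, whereas this sketch approximates that behavior by comparing successive sampled intensity frames.

```python
import math


def generate_events(frames, timestamps, contrast_threshold=0.2, eps=1e-6):
    """Hypothetical, idealized event-camera model (illustration only).

    frames: list of 2D lists of non-negative intensities (rows of pixels).
    timestamps: one timestamp per frame.
    Returns a list of (t, x, y, polarity) tuples, emitted whenever a pixel's
    log-intensity has moved by at least contrast_threshold since its last event.
    """
    # Per-pixel reference log-intensity, initialized from the first frame.
    # eps avoids log(0) for completely dark pixels.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                log_v = math.log(v + eps)
                diff = log_v - ref[y][x]
                # A large brightness change can cross the threshold several
                # times, producing several events of the same polarity.
                while abs(diff) >= contrast_threshold:
                    polarity = 1 if diff > 0 else -1
                    ref[y][x] += polarity * contrast_threshold
                    events.append((t, x, y, polarity))
                    diff = log_v - ref[y][x]
    return events


# Usage: a 1x2 image where pixel (1, 0) doubles in brightness at t=1
# and pixel (0, 0) halves in brightness at t=2.
events = generate_events([[[1.0, 1.0]], [[1.0, 2.0]], [[0.5, 2.0]]], [0, 1, 2])
print(events)
```

Because only pixels whose brightness changes produce output, a static scene generates no data at all. This sparsity is what allows event cameras to achieve low latency and low bandwidth compared with full-frame sensors.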

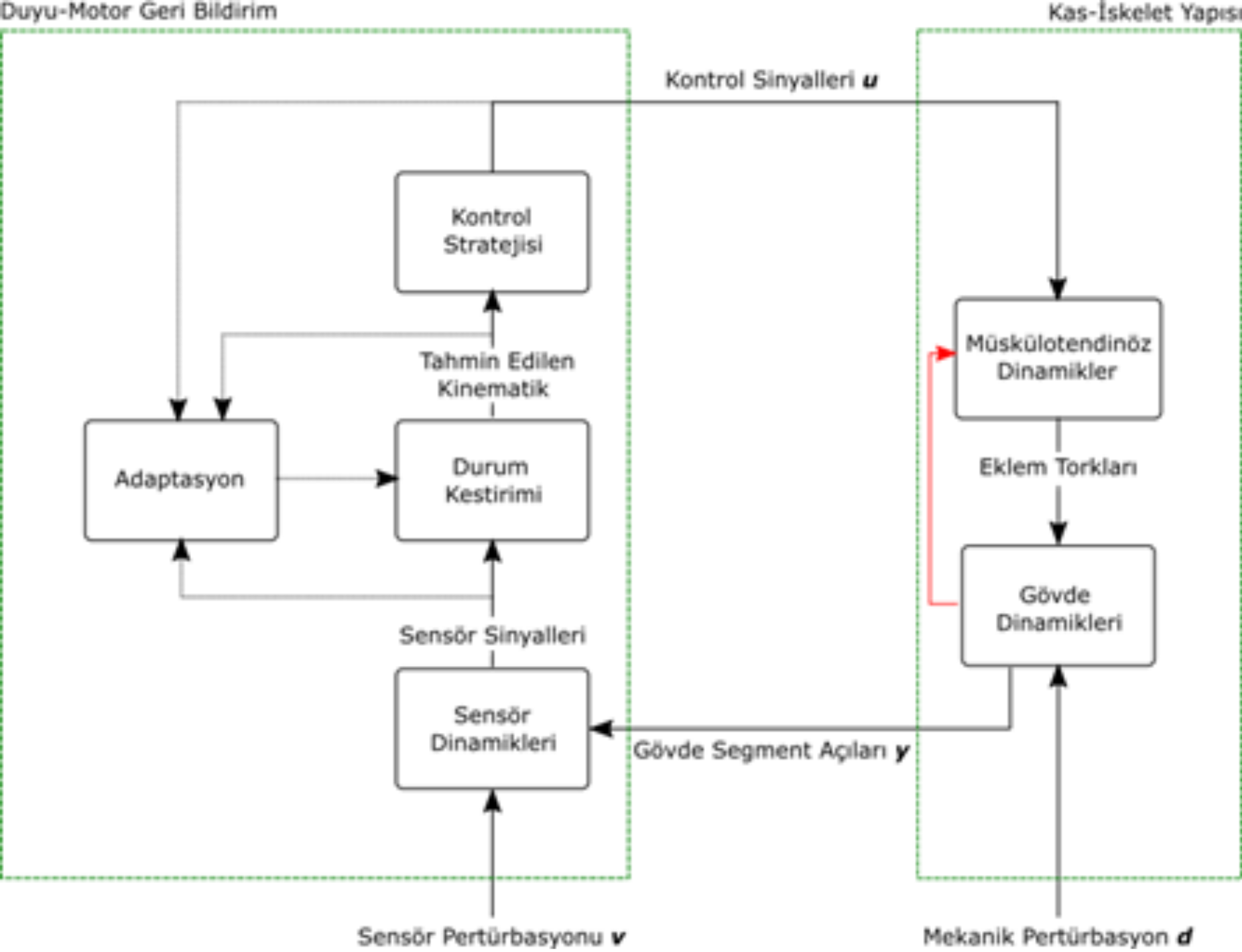

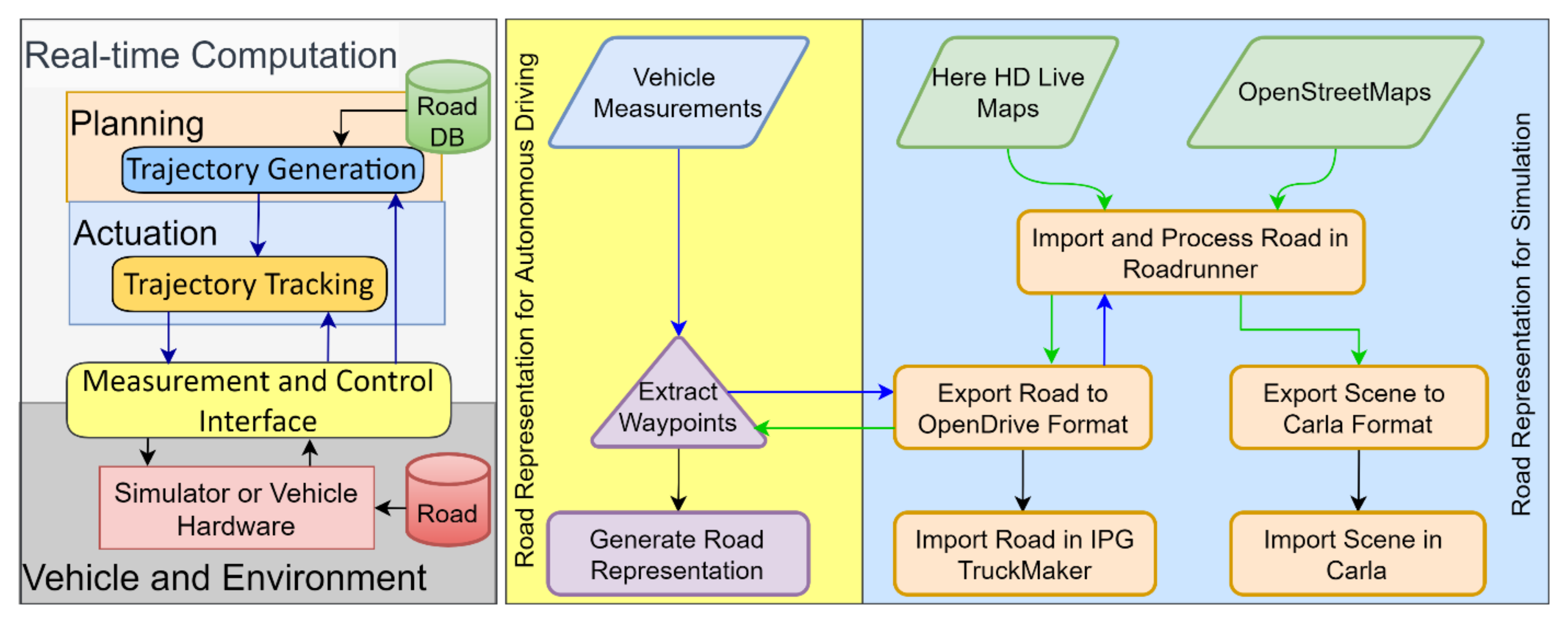

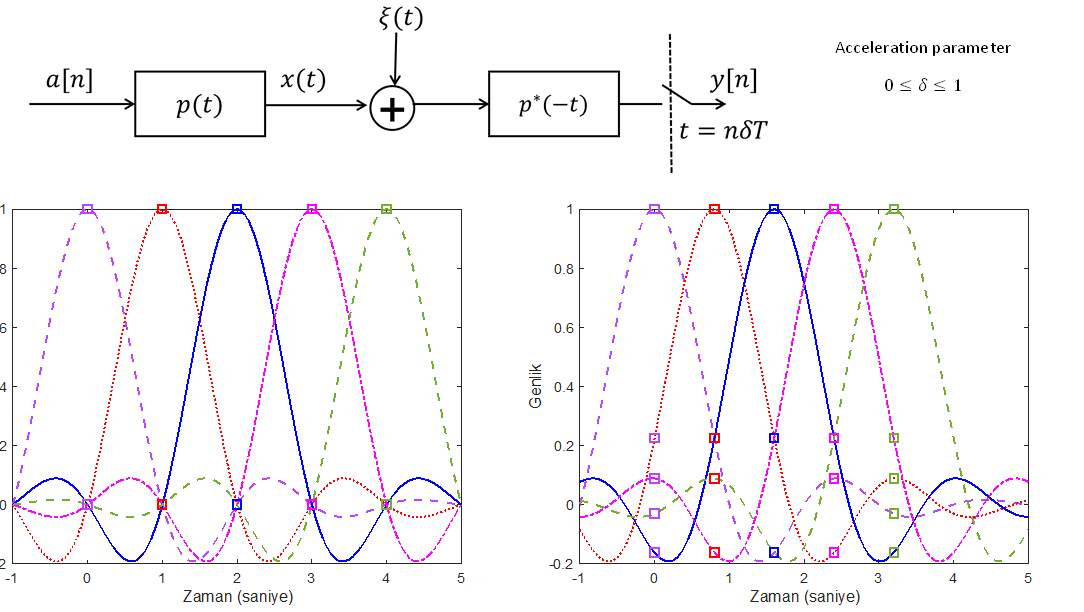

Within the scope of the project, an original real-time event-based VIO algorithm will be developed by tightly coupling data from an event-based camera and an inertial measurement unit (IMU). The study will address event data interpretation, sensor calibration, data fusion, and pose estimation using both model-based and learning-based approaches. The proposed system is expected to deliver stable, long-term localization under challenging conditions such as low illumination, high-speed motion, and GPS-denied environments.

The resulting modular, lightweight, and cost-effective navigation solution is expected to offer significant added value for applications in defense technologies, autonomous ground and aerial vehicles, wearable systems, and mobile robotics. By integrating event-based vision into navigation systems, the project aims to fill an important gap in the current literature and to strengthen national capabilities in next-generation autonomous navigation.